Managing document versions is something that, sooner or later, hits every company with even a little involvement in production.

Obviously, if your company's primary business is software, you are probably already using some kind of source version control system. It may be good old CVS, it may be Subversion, or it may be today's most popular one: Git. Or maybe something else? I don’t know.

Git + GitBlit

I use this combo. I use the Git command line for my software on my workstation, and GitBlit as the web-based server for my work.

GitBlit

GitBlit is really very good server software. It is lightweight, takes less than 15 minutes to get running, runs on Windows or Linux, and you can run as many instances of it on one machine as you like without anything fancy like VMs or Docker. It also doesn’t need any external database, which makes backing it up easy.

Setup and maintenance cost

It can easily handle two instances on a dual-core 1 GHz PC with 1 GB of RAM and takes just a few tens of MB on disk. Configuration is done through very well-commented text files, so it is not a problem. It is pure Java, so it is rock solid. Thank you, guys at OpenJDK, JGit, Tomcat and the entire open source community!

It is very easy to create a separate “production” server (for work) and “testing” server (for training) and make them look different. Since GitBlit is self-contained, making “testing” a duplicate of “production” is a simple matter of copying all files from the “production” folder to the “testing” folder and overriding a few configuration options.
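The copy-and-override step can be scripted. Below is a minimal Python sketch, assuming the whole instance lives in one folder and its settings are plain `key = value` properties lines. The helper names, the `data/gitblit.properties` path and the `server.httpPort` key in the usage comment are assumptions to check against your own installation.

```python
import re
import shutil
from pathlib import Path

# Matches "key = value" or "key: value" lines; comment lines (# or !) never match.
KEY_LINE = re.compile(r"\s*([^#!\s=:][^=:\s]*)\s*[=:]")


def set_properties(path: Path, overrides: dict[str, str]) -> None:
    """Rewrite matching keys in place and append keys that are missing."""
    out, seen = [], set()
    for line in path.read_text(encoding="utf-8").splitlines():
        m = KEY_LINE.match(line)
        if m and m.group(1) in overrides:
            key = m.group(1)
            out.append(f"{key} = {overrides[key]}")
            seen.add(key)
        else:
            out.append(line)
    out.extend(f"{k} = {v}" for k, v in overrides.items() if k not in seen)
    path.write_text("\n".join(out) + "\n", encoding="utf-8")


def clone_instance(production: Path, testing: Path, config_name: str,
                   overrides: dict[str, str]) -> None:
    """Copy the whole production folder, then patch only the copy's settings."""
    if testing.exists():
        shutil.rmtree(testing)           # always start from a fresh copy
    shutil.copytree(production, testing)
    set_properties(testing / config_name, overrides)


# Hypothetical usage; folder names, file name and keys are examples only:
# clone_instance(Path("/srv/gitblit-production"), Path("/srv/gitblit-testing"),
#                "data/gitblit.properties", {"server.httpPort": "8081"})
```

Rewriting keys in place, rather than appending duplicates, keeps the testing copy readable when someone later opens the file by hand.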

Oh, by the way, the entire process of migrating the server from Windows to Linux was just… copying the server folder from one machine to another. All right, all right, I am not entirely honest with you here. I also spent a whole day learning how to make systemd start, stop and back up those servers automatically when I push the “power” button on my tiny server. Mostly because I was still in the System V init era.

GitBlit (v1.8 at least; I am not sure about 1.9) also requires that you stop it from time to time and run git gc on every repository on the server. Since I already do an incremental backup daily when the server powers up (I just push the power button and let systemd handle it), it was no big problem to add some scripts to do it. My server is a plain headless PC, so I power it up when I come to work and power it down when the last person goes home. This is a temporary solution, because it is a very beaten-up PC and I don’t trust it to keep running 24/7. And honestly… there is no need to. We work neither at night nor remotely.
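The periodic git gc pass is easy to script. A minimal Python sketch, assuming the server is already stopped and that every repository is a bare directory (containing HEAD, objects and refs) somewhere below one root folder; the function names are hypothetical. By default it only builds the list of commands (a dry run).

```python
import os
import subprocess
from pathlib import Path


def find_bare_repos(root: Path) -> list[Path]:
    """Return every directory below root that looks like a bare Git repository."""
    repos = []
    for dirpath, dirnames, _filenames in os.walk(root):
        p = Path(dirpath)
        if (p / "HEAD").is_file() and (p / "objects").is_dir() and (p / "refs").is_dir():
            repos.append(p)
            dirnames.clear()             # don't walk into the repository internals
    return sorted(repos)


def gc_all(root: Path, dry_run: bool = True) -> list[list[str]]:
    """Build one `git gc` command per repository; run them unless dry_run."""
    commands = [["git", "-C", str(repo), "gc"] for repo in find_bare_repos(root)]
    if not dry_run:
        for cmd in commands:
            subprocess.run(cmd, check=True)  # stop at the first failing repository
    return commands
```

Calling `gc_all(root, dry_run=False)` from the same systemd-triggered script that does the backup keeps the whole maintenance routine in one place.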

Backing up GitBlit is easy. I just stop the server and copy the server folder to a USB disk with standard Linux incremental backup tools.
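The core idea of an incremental copy fits in a few lines. This Python sketch mimics the “quick check” rsync uses by default (a file is copied when it is missing or differs in size or modification time); it illustrates the idea and is not a replacement for the real tools. It never deletes anything from the backup, and the function name is hypothetical.

```python
import shutil
from pathlib import Path


def incremental_copy(src: Path, dst: Path) -> list[Path]:
    """Copy files from src to dst when missing or different in size/mtime.

    Returns the relative paths that were copied. Files removed from src are
    deliberately kept in dst, so the backup only ever grows."""
    copied = []
    for f in sorted(src.rglob("*")):
        if not f.is_file():
            continue
        rel = f.relative_to(src)
        target = dst / rel
        st = f.stat()
        if target.exists():
            tt = target.stat()
            # whole seconds, because some file systems store coarser timestamps
            if tt.st_size == st.st_size and int(tt.st_mtime) == int(st.st_mtime):
                continue
        target.parent.mkdir(parents=True, exist_ok=True)
        shutil.copy2(f, target)          # copy2 keeps mtime, so the next run skips it
        copied.append(rel)
    return copied
```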

It has Git LFS (Large File Storage), but without locks. After trying it out I found that LFS brings little benefit apart from locks, and that it puts a greater load on the server due to the lack of delta compression. Also, regular Git is specified at the file format level, while LFS is specified at the protocol level. This means that if my server totally crashes, I can always copy non-LFS repositories to another machine and use them with any Git implementation. With LFS that is not true, as the file storage format is specific to the server implementation.
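To illustrate what “specified at the file format level” means: a regular Git object is named by a hash of its own content, so every Git implementation computes the same name for the same file. A minimal Python sketch for SHA-1 repositories (the default; SHA-256 repositories use a different hash); the function name is made up.

```python
import hashlib


def git_blob_id(data: bytes) -> str:
    """Object ID Git gives a file's content in a SHA-1 repository.

    Git hashes the header "blob <size in bytes>\\0" followed by the raw bytes."""
    header = b"blob " + str(len(data)).encode("ascii") + b"\0"
    return hashlib.sha1(header + data).hexdigest()


print(git_blob_id(b""))  # e69de29bb2d1d6434b8b29ae775ad8c2e48c5391 (the empty blob)
```

For any file, this is the same value that `git hash-object <file>` prints.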

So now I don’t use LFS at all, even for large binary files. There is no need for it.

Tuning it and fixing bugs

On the downside, the GitBlit source code quality is rather poor, but it doesn’t stand out much from other open source projects. I was able to modify it to my needs without great hassle.

The source is, however, not fully self-contained, and a build environment practically can’t be set up without an internet connection because of the nightmarish web of dependencies. Because of that I am worried about how long I will be able to maintain it if the community project dies, but after a bit of investigation I found that nowadays almost every open source project is so interlaced with others that getting your hands on the complete source code base is hardly possible.

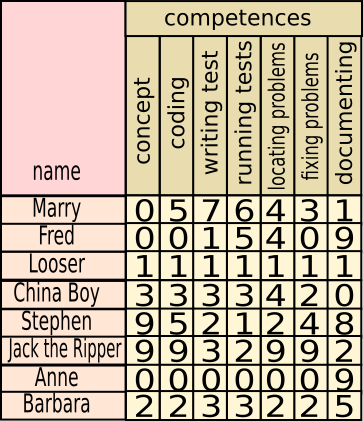

All in all, if you are good with Java you will be able to fix most problems in the code by yourself. I fixed some problems with displaying gigabyte-sized commits with tens of thousands of files in one push, and added a better logging facility. I think the next thing I will add is the ability to see per-user activity (I am a low-level manager, so I would like to check who has been doing what and when) and full-text search with Tika decoding. I miss this last feature badly. I really need to search through at least PDFs.

GitBlit user experience

From the user's point of view, GitBlit is a browser-based viewer for Git repositories plus a system to manage a kind of web forum for each repository. This can be used to make “to-do” notes, make requests and file bug reports.

It lacks many features, like the ability to make commits through the web, full-text search across non-text files (it works with raw text files, though), adding attachments to forum posts and so on.

Browsing repositories feels very much like browsing folders on your PC. You just select a branch, commit or tag and click “tree”. And there you go: you have your repository as it was on that day. Want a file downloaded? Right-click on “raw”. Want a full folder or repository snapshot on your PC? Click “zip” and download it. No need to clone anything with Git command-line tools.

Since all page addresses are static, you can just bookmark the version you need and use it. You can e-mail it to others, but you can also restrict repository access so that not everybody is able to see it. You can’t, however, set permissions on a per-branch basis, which makes it a bit tricky to use the server as a “distribution platform”. Well… you just need to teach users to use tags to get to their release versions, or have a separate “public release” repository.

Tracking who changed what in Git is, in general, very easy to crack. It is possible to forge a commit under somebody else’s name, because author identification in commits is declarative. You can make Git use GPG (OpenPGP) digital signatures, but sadly GitBlit does not support validating them. But honestly… if one of the key designers in your company decides to destroy you, then you are boned. He or she will do it regardless of anything you can think of. All you will be able to do is sue him or her later.
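The reason is visible in Git’s commit format: the author and committer lines are plain text written by the client (normally taken from the user.name and user.email settings). A minimal Python sketch that builds a parentless commit object by hand, for SHA-1 repositories; the names, e-mail addresses, timestamp and helper names are made up for the illustration. Nothing in the object ties the name to a real person unless the commit is signed (git commit -S) and something actually checks that signature.

```python
import hashlib

EMPTY_TREE = "4b825dc642cb6eb9a060e54bf8d69288fbee4904"  # tree object with no entries


def raw_commit(tree: str, who: str, message: str,
               when: int = 1_700_000_000, tz: str = "+0000") -> bytes:
    """Raw bytes of a parentless Git commit object.

    `who` is free text such as "Jane Doe <jane@example.com>": Git stores it
    exactly as given and does not check that it belongs to the committer."""
    ident = f"{who} {when} {tz}"
    body = (f"tree {tree}\n"
            f"author {ident}\n"
            f"committer {ident}\n"
            f"\n{message}\n").encode("utf-8")
    return b"commit " + str(len(body)).encode("ascii") + b"\0" + body


def object_id(raw: bytes) -> str:
    """SHA-1 object name of a raw Git object (header included)."""
    return hashlib.sha1(raw).hexdigest()


forged = raw_commit(EMPTY_TREE, "The Boss <boss@example.com>", "Approved")
```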

Oh, by the way, I could not convince GitBlit to reject push --force, which is a straight path to oblivion.
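On servers that run native Git hooks, this kind of push can be refused in a pre-receive hook, which receives one “old-id new-id ref-name” line per updated ref on standard input and rejects the whole push when it exits non-zero (plain Git servers also offer the receive.denyNonFastForwards setting). GitBlit has its own Groovy hook mechanism instead, so the Python sketch below only illustrates the rule; it is not a drop-in fix, and the function names are hypothetical.

```python
import subprocess

ZERO_ID = "0" * 40  # "no object" in SHA-1 repositories: ref created or deleted


def is_ancestor(old: str, new: str) -> bool:
    """True when `old` is reachable from `new`, i.e. the update is a fast-forward."""
    result = subprocess.run(["git", "merge-base", "--is-ancestor", old, new])
    return result.returncode == 0


def rejected_updates(lines, ancestor=is_ancestor) -> list[str]:
    """Check pre-receive input lines; return one message per refused ref update."""
    errors = []
    for line in lines:
        if not line.strip():
            continue
        old, new, ref = line.split()
        if new == ZERO_ID:
            errors.append(f"{ref}: deleting refs is not allowed")
        elif old != ZERO_ID and not ancestor(old, new):
            errors.append(f"{ref}: non-fast-forward (forced) push rejected")
    return errors
```

A hook script would call `rejected_updates(sys.stdin)`, print the messages to standard error and exit with status 1 when the list is not empty. New branches (old ID is all zeros) are allowed.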

Command line + GitAhead

It gets a bit trickier when you need to stop being just a passive user who browses, downloads and posts on forums, and must become a user who commits. Learning some client-side tools is necessary. I chose the command line, but for members of my team who are not programmers I chose GitAhead as the most entry-level and user-friendly solution I could find. The project is stale, but it works and is very, very easy to learn. You can’t do everything with it, but at entry level a GUI that can do everything is a source of problems, not solutions.

And what does Autodesk Vault have to do with it?

Git is for source code, right?

But what if you also have other files?

I personally create plenty of Inkscape files, HTML files, OpenOffice files, CAD files (in many programs), 3D printing files, photos and even sound files.

So I need to be able to keep them somewhere, track their history and share them easily.

Commercial solution

Since the mechanical CAD I use at work is Autodesk Inventor Pro, the company I work for decided to provide all mechanical designers with some version control software.

The off-the-shelf solution is Autodesk Vault. Of course it is a Windows-only, GUI-only solution. No command line, so no scripts to make your work easier.

When we decided to put our mechanical CAD files into Vault, we also thought: “why not put other files there too?”

Since I also do programming work, the first question I asked the Autodesk reseller who provided us with Vault was: “Can I keep my source code there?”

The answer was plain: “You will be sorry if you try it.”

And the reseller was right. Vault is not powerful enough to deal with source code.

What is Vault, technically?

Versioning

Technically it is like one huge CVS repository. It tracks the history of each file separately.

The history is linear; there is no such thing as branches.

Each time you want to edit a file, you must “check it out” from the server, and you can only check out the latest version. Only one user can have a file checked out at a time, so there is per-file conflict protection.
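This check-out rule is easy to model. A toy Python sketch that assumes nothing about Vault’s real API (the class and method names are made up): one lock holder per file, check-out always hands out the latest version, and check-in appends to a single linear history.

```python
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class LockedFile:
    """Toy model of per-file check-out/check-in; not Autodesk's API."""
    versions: list = field(default_factory=lambda: [b""])
    holder: Optional[str] = None

    def check_out(self, user: str) -> bytes:
        if self.holder is not None:
            raise PermissionError(f"already checked out by {self.holder}")
        self.holder = user
        return self.versions[-1]        # only the latest version can be checked out

    def check_in(self, user: str, content: bytes) -> int:
        if self.holder != user:
            raise PermissionError(f"{user} has not checked this file out")
        self.versions.append(content)   # strictly linear history, no branches
        self.holder = None
        return len(self.versions) - 1   # number of the new version
```

The lock prevents two people from editing the same file at once, but nothing in this model lets you start new work from an older version.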

Organizing it

You can organize files in folders, but the file history does not store how a file moved within the folder tree. If in version 1.0 it was in folder V:\myjob1 and in version 2.0 you moved it to V:\finallydone, then when you ask Vault for the file in version 1.0 it will put it… in V:\finallydone.

Do I have to tell you what that does to paths in dependencies?

In practice this means that you can’t do any cleanup in your project, because any move of files alters all versions, current and historical. It can even break the history: if you swap two files with the same name that live in different folders, while a third file references them by path, something like the following may happen.

Version 1.0

file "A" references file "V:\boo\a.txt",

where a.txt contains text "marakuja" and has unique ID XXXX1

file "B" references file "V:\moo\a.txt",

where a.txt contains text "borakuja" and has unique ID XXXX2

In version 1.1 you changed the texts to “marakuja+” and “borakuja+”.

Then, in version 2.0, you realize that you named your folders incorrectly by mistake. So, using the Vault GUI, you tell Vault to move file “boo\a.txt” to “moo\a.txt” and vice versa. Of course you also update the paths in “A” and “B”, so it now looks like this:

file "A" references file "V:\moo\a.txt",

where a.txt contains text "marakuja+" and has unique ID XXXX1

file "B" references file "V:\boo\a.txt",

where a.txt contains text "borakuja+" and has unique ID XXXX2

Vault history tracking does not record the fact that you moved your files, even if you do the move through the Vault GUI. If you do it inside your working folder, it won’t even try to detect the move, which in that case would be correct behavior. The Vault GUI just updates the database to say that the file with such-and-such unique ID is in such-and-such folder. It does not save this in the history of that file.

So the next time you look at the history for version 1.0, you will see:

Version 1.0

file "A" references file "V:\boo\a.txt",

where a.txt contains text "borakuja" and has unique ID XXXX2

file "B" references file "V:\moo\a.txt",

where a.txt contains text "marakuja" and has unique ID XXXX1

which is simply not right.
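The effect can be reproduced with a toy model in which contents are versioned per file ID while the folder is a single current value per file. This Python sketch is an illustration of the behavior described above, not Vault’s actual schema; the class and method names are made up.

```python
class PathlessHistory:
    """Toy model: file contents are versioned, but folder locations are not."""

    def __init__(self):
        self.location = {}   # file ID -> path; a move simply overwrites it
        self.content = {}    # (file ID, version) -> text

    def check_in(self, file_id, path, version, text):
        self.location[file_id] = path
        self.content[(file_id, version)] = text

    def move(self, file_id, new_path):
        self.location[file_id] = new_path   # nothing is written to history

    def snapshot(self, version):
        """Rebuild {path: text} for an old version using *today's* locations."""
        return {self.location[fid]: text
                for (fid, ver), text in self.content.items() if ver == version}


h = PathlessHistory()
h.check_in("XXXX1", r"V:\boo\a.txt", "1.0", "marakuja")
h.check_in("XXXX2", r"V:\moo\a.txt", "1.0", "borakuja")
h.check_in("XXXX1", r"V:\boo\a.txt", "1.1", "marakuja+")
h.check_in("XXXX2", r"V:\moo\a.txt", "1.1", "borakuja+")
h.move("XXXX1", r"V:\moo\a.txt")      # the swap made in version 2.0
h.move("XXXX2", r"V:\boo\a.txt")
print(h.snapshot("1.0")[r"V:\boo\a.txt"])  # borakuja (version 1.0 had marakuja there)
```

Storing the path together with each version, instead of once per file, is what would keep old snapshots intact.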

The same rule applies to deleting files. If you delete the file with unique ID XXXX2, it is deleted from the database, with all its history. You just can’t delete a file that is not needed in version 3.0, because it is needed in version 1.0. It will live in your folder forever.

So is it just fucked up?

Mostly. But before throwing it in the garbage bin, let us inspect its other features.

More advanced organizing tools

You can assign “categories” to files, but categorization is based not on “MIME magic” (the file’s actual content) but just on the file extension.
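The difference between the two approaches fits in a few lines. A small Python sketch, standing in for real content-sniffing libraries such as libmagic, that checks a handful of well-known file signatures; the signature table is deliberately tiny and the function names are made up.

```python
from pathlib import Path

# A few well-known file signatures ("magic numbers").
SIGNATURES = {
    b"%PDF-": "pdf",
    b"\x89PNG\r\n\x1a\n": "png",
    b"\xff\xd8\xff": "jpeg",
    b"PK\x03\x04": "zip container",   # .odt, .docx and .3mf files are ZIP files too
}


def category_by_extension(name: str) -> str:
    """What an extension-based categorizer sees."""
    return Path(name).suffix.lower().lstrip(".") or "unknown"


def category_by_content(data: bytes) -> str:
    """What a content-based ("MIME magic") categorizer sees."""
    for magic, kind in SIGNATURES.items():
        if data.startswith(magic):
            return kind
    return "unknown"
```

A PNG image saved as “drawing.pdf” is filed as a PDF by the first function and recognized as a PNG by the second.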

Since Vault is one huge repository, there is no easy way to get a “snapshot” of your working folder and restore the entire folder to a specific date in bulk (well… there is, but it is broken beyond imagination, so I will skip it).

Autodesk decided to help you with this in two ways: by letting you “attach” files to each other and by letting you create so-called “items”.

And here money comes into play.

The only organizing tool that can clip files together while preserving their versions is “items”. And “items” are available only in the most expensive subscription plan.

“Items” are just a flat list of names that lets you bundle files together into a consistent “snapshot”. This list grows rapidly and there is no “tree” view for it.

“Items” do have their own history and correctly track which version of a file is attached to which version of an item. So you can use “items” to bundle up your files.
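What makes “items” useful is that each item version pins exact file versions, so an old snapshot stays consistent whatever happens to the files later. A toy Python model with hypothetical names (not Vault’s API):

```python
from dataclasses import dataclass, field


@dataclass
class Item:
    """Toy model of an 'item': each item version pins exact file versions."""
    name: str
    versions: list = field(default_factory=list)   # list of {file name: file version}

    def release(self, pins: dict) -> int:
        self.versions.append(dict(pins))   # copy, so later edits to `pins` don't leak in
        return len(self.versions) - 1      # number of the new item version

    def files_at(self, item_version: int) -> dict:
        return dict(self.versions[item_version])
```

By contrast, a plain folder view always shows the latest version of every file, which is exactly the inconsistent snapshot users end up with.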

In fact, “items” are the primary elements of Autodesk Vault that are versioned, controlled, validated and so on.

Attachments do not track anything; at least, I could not figure out how to use them in an easy and predictable way.

Browsing it

In practice the only way to get a consistent snapshot of a project is to use “items”, so this should be your starting point. Sadly, items are just a flat list, while files are presented as a folder tree. So guess where every user starts? From the file tree, of course. And guess what the user gets? An inconsistent snapshot.

And, by the way, you can’t diff your files. This is an Autodesk program dedicated to Autodesk CAD, and it can’t… show you what has changed between versions. Yes, no diff. No diff. No diff.

Am I repeating myself?

No diff for Autodesk’s closed-format files in Autodesk’s closed-source program.

It is even funnier. The Vault GUI itself can’t display Autodesk Inventor files, even though it is a dedicated Inventor tool. It must ask Inventor to convert the CAD file from Inventor format to DWF format, and when you ask Vault to show you the CAD file, it shows you the DWF file instead. If you happen not to have Inventor on your machine, and no running instance of Inventor advertises itself as a “job processor”, then the DWF file may be “stale”.

It did not take me long to look at the same file, in the same version, and see three different drawings. When I opened the CAD file with Autodesk Inventor, I saw something different from what I saw in Autodesk Inventor Viewer. And the DWF file, which in theory should be just a “visualization” of that CAD file, showed yet another drawing.

So this is the version control You get with Vault.

Fortunately, and this is a bit of a paradox, Vault behaves better with non-Autodesk files, provided there are no cross-file dependencies. If you tend, just like me, to create OpenOffice documents that are linked to each other and to images, then you are boned.

The Vault server also comes with a so-called “thin client”, which is a web browser interface. It is not super easy to use, but for read-only access it is far better than the normal GUI. And it does not consume a license, so it is the only practical front-end for distributing files.

Can I use Git with CAD files?

Sure, no problem. Git can handle any kind of file.

There are, however, three things you must know.

First, Git is conceptually a distributed system which assumes that changes made to the same file by two people can always be merged easily. This is true for plain text files, like program source files, but almost never true for JPG files, CAD files and the like.

Second, Git and Git servers really do try to show users the difference between versions of the same file. Again, this is possible for plain text files and rarely possible for closed, proprietary CAD formats. It is always a bit of a pain in the behind to convince Git web servers not to show diffs for such files. GitBlit is not much better than the others in this area.
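For the record, Git’s own default guess is simple: if a NUL byte appears within the first 8000 bytes of a file, the file is treated as binary and no text diff is shown. A `.gitattributes` line such as `*.ipt binary` (the `binary` macro means `-diff -merge -text`) overrides the guess, although whether a given web server honors attributes is up to that server. A minimal Python sketch of the heuristic; the function names are made up.

```python
from pathlib import Path

FIRST_FEW_BYTES = 8000  # the window Git inspects


def looks_binary(data: bytes) -> bool:
    """Mimic Git's default text/binary guess: any NUL byte near the start."""
    return b"\0" in data[:FIRST_FEW_BYTES]


def classify(path: Path) -> str:
    """Read only the window Git looks at and report 'binary' or 'text'."""
    with path.open("rb") as f:
        return "binary" if looks_binary(f.read(FIRST_FEW_BYTES)) else "text"
```

The guess can fail both ways: a binary format without early NUL bytes gets treated as text, and UTF-16 text (full of NUL bytes) gets treated as binary, which is why explicit attributes are the safer choice for CAD files.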

And third, since Git assumes it is distributed, it has no mechanism to prevent two users from working on the same file, because it assumes those changes can be merged at low cost.

None of these three assumptions holds when you use Git for closed-format CAD files.

Is it a big problem?

No.

Basically, the problem does not appear if just one person works on a repository at a time. If you can ensure that by regular job management, you will have zero problems. Except for the lack of diff, but sorry, if Autodesk can’t diff their own files, how could Git do it?

Git will not help you with that, but it will detect if you screw up and prevent conflicting updates. Sure, one of the people in the conflict will have to redo their work, but Git will not let the two pieces of work clash.

Beyond version control

Both Git and Vault are version control systems. Git is infinitely better at it than Vault. And the price difference is outstanding.

But version control is not everything.

You need some formal change control. That is, you need to know not only what has changed, but also who changed it, who verified it and who accepted it.

A formal “request for change”.

Request for change and Git

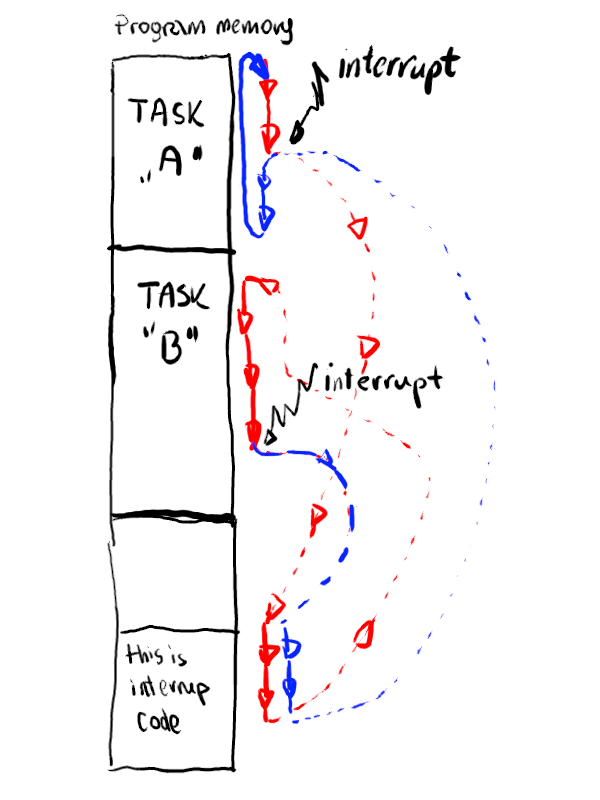

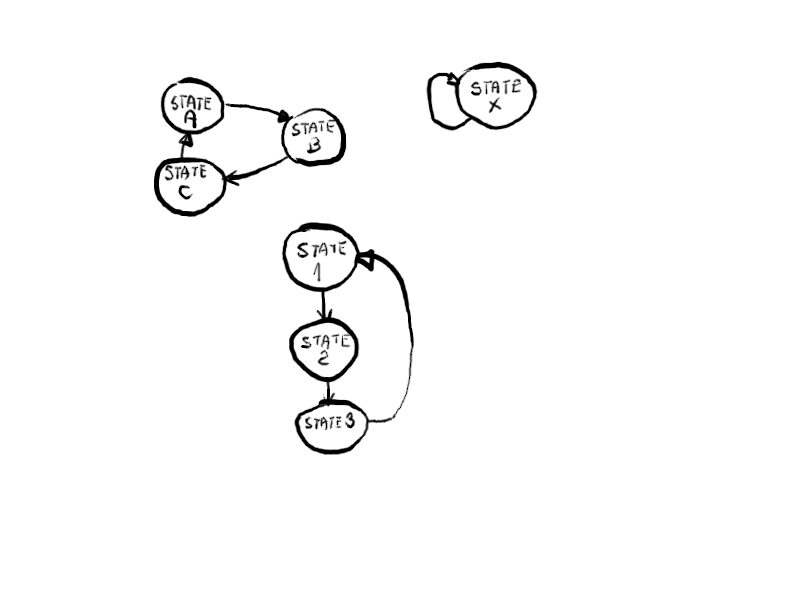

Plain Git has no such support. None. Zero. When it is done, it is usually done by having branches and moving changes from branch to branch.

Request for change and Vault

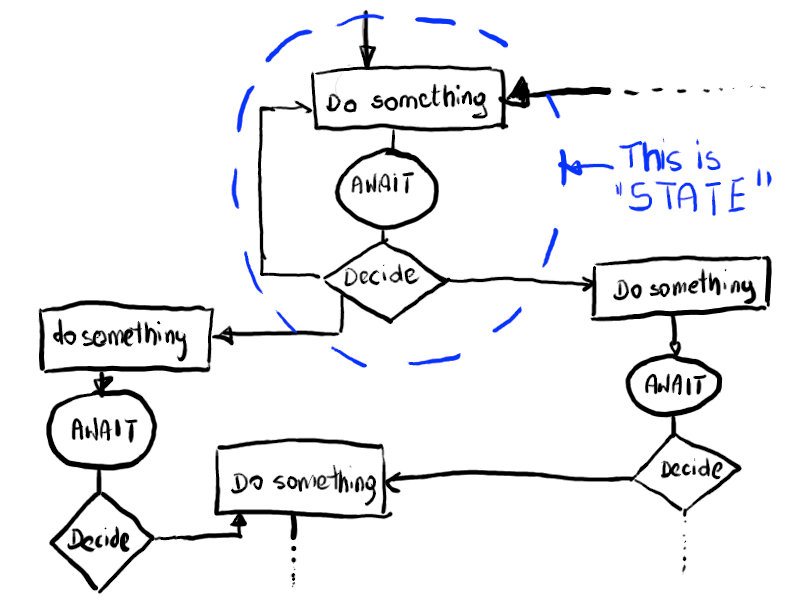

Vault does have it. That is good. The sequence of state transitions is, however, hard-coded, and if your formal control flow does not fit it, you can’t use it at all.

Even though Vault calls it a “change request”, it is in fact “change processing”.

The difference is simple: making a “change request” is like making a proposal: “Can you make it such and such?”. Such a proposal is connected to a specific project, possibly in a specific version, but the fact that somebody made a proposal does not mean the work must be done. It may be a dumb proposal. And it may hang around for a long time.

By contrast, “change processing” tracks what is actually being done and prevents two changes from conflicting.

In Autodesk Vault you open “change requests” for “items”. This is good, because an item tracks versions. But when you file a “change request” in Vault, you prevent other “change requests” from being opened for the same “item”. And this is a blocker, because you can’t report more than one issue at a time.

So it is also definitely broken.

Request for change and GitBlit

GitBlit does have a very simple change request system: the per-repository forum (ticket system).

You just post on the forum and select a type for your post (“question”, “bug” and many others). Each type of post has a set of possible states. There are not enough states for it to be a truly formal system. If your post requires some changes and change processing starts, you can (although only through the command line) ask GitBlit to create a branch dedicated to handling that post. The server will then nicely catch this branch and attach it to the post.

This shows the difference between a “change request” (when you create a post and discuss it) and “change processing” (when you create a branch for a post and work on it).

GitBlit forum posts can also have votes. The simple voting system can be used to track who accepted something and who rejected it, but there is no hard barrier that stops a change from being introduced into the “formally good” branch of history.

The forum is, however, flexible enough that you can track who had objections and who gave the green light to proceed. The system won’t protect you against misuse, though.

Oh, and there is an e-mail notification system which is poorly documented but, surprisingly, works. Since I am more of a “phone person” than an “e-mail person” and check my e-mail once a week at most, I rarely use it.

Summary

Git+GitBlit, or in fact any other decent web Git server with support for discussion forums, can serve as a complete document management system, provided you are not especially picky about access control and you have some trust in your employees.

There is absolutely no problem with CAD files or other binary files, but some regular job management is necessary to avoid conflicts.

The price point of such system is unbeatable.

The cost of maintaining Git on each workstation is the same as for LibreOffice, the cost of maintaining the GitBlit server is about one work hour per month in my experience, and there are no licensing issues.

Git can be scripted, used from the command line or with one of many, many GUIs available on the market.

There are plenty of free books in many languages, and you will surely find some printed ones in your own language.

The learning curve is at first damn steep, then flat, then steep again. Basic activity requires about 16 hours of well-prepared training. If you prepare your repositories well and arm them with scripts, the most common actions can become single clicks.
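As an example of turning a common action into a single click, here is a hypothetical Python wrapper that stages everything, commits and pushes. It is a sketch, not a finished tool, and the function name is made up: git commit exits with an error when there is nothing to commit, and the push is refused if someone else pushed first, so in both cases the script stops with an error.

```python
import subprocess
from pathlib import Path


def save_work(repo: Path, message: str, dry_run: bool = False) -> list[list[str]]:
    """Hypothetical one-click 'save': stage everything, commit, push."""
    steps = [
        ["git", "-C", str(repo), "add", "--all"],
        ["git", "-C", str(repo), "commit", "-m", message],
        ["git", "-C", str(repo), "push"],
    ]
    if not dry_run:
        for cmd in steps:
            subprocess.run(cmd, check=True)  # abort on the first failing step
    return steps
```

Bound to a desktop shortcut per repository, something like this hides the staging area completely, which is usually what non-programmers want at the start.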

Vault is expensive, hard to set up, and has hidden licensing costs: Windows and a Microsoft SQL Server database are minimum requirements. And it is broken beyond repair. The only usable scenario is using it as file storage for “official versions”. Using it to track daily work, even with Autodesk Inventor and the similar programs it is dedicated to, is not the best idea: sooner or later you will go back to making folders like “old”, “test1”, “newversion” and so on, due to the lack of history branches. And forget about back-fixes, since you can’t check out an old version and can’t branch history.

Since in my mechanical designs I usually make three or four attempts, restarting from zero each time with many returns and borrowings, Vault is completely useless for my daily work.

With Vault you have professional support, at least in theory, because in practice it doesn’t work.

Which you don’t have with Git+GitBlit, but you can easily get it with Git+GitLab.

I don’t recommend running GitLab on your own server, because fine-tuning it, backing it up and running more than one instance is a real pain compared with the 15-minute GitBlit. We have GitLab too, and after a year it is still not working properly. And nobody knows why. It is just too powerful and too complex. We have about 40 users, and GitLab is capable of handling tens of thousands of them. This is not the right scale for us.

If I were you, I would give Git+GitBlit a try.

But if my pay depended on how valuable the tools I manage are, which is typical at the corporate manager level, then choosing zero-cost tools would be stupid.